Lustre Object Storage Service (OSS)

No edit summary |

No edit summary |

||

| Line 3: | Line 3: | ||

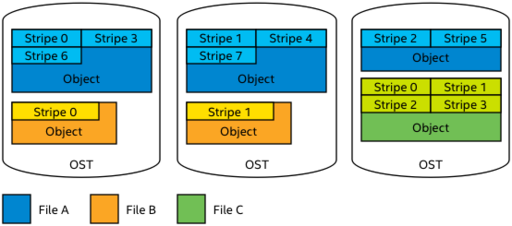

Files in Lustre are composed of one or more OST objects, in addition to the metadata inode. The allocation of objects to a file is referred to as the file’s layout, and is determined when the file is created. The layout for a file defines the set of OST objects that will be used to hold the file’s data. Each object for a given file is held on a separate OST, and data is written to the objects in a round-robin allocation as a stripe. As an analogy, one can think of the objects of a file as virtual equivalents of disk drives in a RAID-0 storage array. Data is written in fixed-sized chunks in stripes across the objects. The stripe width is also configurable when the file is created, and defaults to 1MiB. | Files in Lustre are composed of one or more OST objects, in addition to the metadata inode. The allocation of objects to a file is referred to as the file’s layout, and is determined when the file is created. The layout for a file defines the set of OST objects that will be used to hold the file’s data. Each object for a given file is held on a separate OST, and data is written to the objects in a round-robin allocation as a stripe. As an analogy, one can think of the objects of a file as virtual equivalents of disk drives in a RAID-0 storage array. Data is written in fixed-sized chunks in stripes across the objects. The stripe width is also configurable when the file is created, and defaults to 1MiB. | ||

Figure 2-3-1. Files are written to one or more objects stored on OSTs in a stripe pattern


Figure 2-3-2. Performance and Capacity Scale Linearly with Increasing Numbers of OSSs and OSTs

| Line 31: | Line 31: | ||

As for other Lustre server components, object storage servers can be combined into high availability configurations, and this is the normal practice for Lustre file system implementations. Unlike the metadata and management services, object storage servers are only grouped with other object storage servers when designing for HA. | As for other Lustre server components, object storage servers can be combined into high availability configurations, and this is the normal practice for Lustre file system implementations. Unlike the metadata and management services, object storage servers are only grouped with other object storage servers when designing for HA. | ||

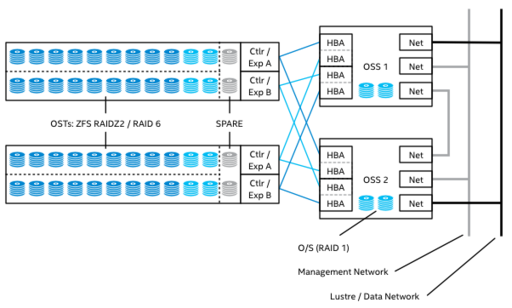

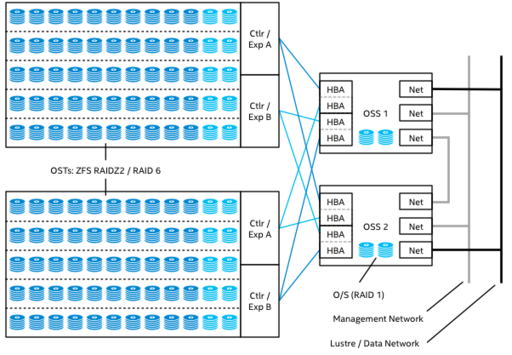

The physical design of a typical OSS building block is very similar to that of the MDS. The most significant external difference is the storage configuration, which is typically designed to maximize capacity and read/write bandwidth. Storage volumes are commonly formatted for RAID 6, or in the case of ZFS, RAID-Z2. This provides a balance between optimal capacity and data integrity. The example in [[#Figure | The physical design of a typical OSS building block is very similar to that of the MDS. The most significant external difference is the storage configuration, which is typically designed to maximize capacity and read/write bandwidth. Storage volumes are commonly formatted for RAID 6, or in the case of ZFS, RAID-Z2. This provides a balance between optimal capacity and data integrity. The example in [[#Figure 2-3-3a|Figure 2-3-3a]] is a low-density configuration with only 48 disks across two enclosures. It is more common to see multiple high-density storage enclosures with 60 disks, 72 disks, or more attached to object storage servers, an example of which is shown in [[#Figure 2-3-3b|Figure 2-3-3b]]. | ||

A typical building block configuration will comprise two OSS hosts connected to a common pool of shared storage. This storage may be a single enclosure or several, depending on the requirements of the implementation: a site may wish to optimize the Lustre installation for throughput performance, choosing lower capacity, high performance storage devices attached to a relatively high number of servers, or it may place an emphasis on capacity over throughput with high density storage connected to relatively fewer servers. Lustre is very versatile and affords system architects flexibility in designing a solution appropriate to the requirements of the site. | A typical building block configuration will comprise two OSS hosts connected to a common pool of shared storage. This storage may be a single enclosure or several, depending on the requirements of the implementation: a site may wish to optimize the Lustre installation for throughput performance, choosing lower capacity, high performance storage devices attached to a relatively high number of servers, or it may place an emphasis on capacity over throughput with high density storage connected to relatively fewer servers. Lustre is very versatile and affords system architects flexibility in designing a solution appropriate to the requirements of the site. | ||

Figure 2-3-3a. Object Storage Server HA building block with 4 OSTs

Figure 2-3-3b. Object Storage Server HA building block with 10 OSTs

| Line 57: | Line 57: | ||

Each OST zpool must contain a minimum of one RAIDZ2 vdev, but may contain many, creating a stripe of RAIDZ2 vdevs. This arrangement, analogous to a RAID 6+0 layout for non-ZFS storage, can yield good performance for ZFS when compared to a pool containing a single RAIDZ2 vdev. Again, experimentation is essential in order to drive the best performance from the hardware. | Each OST zpool must contain a minimum of one RAIDZ2 vdev, but may contain many, creating a stripe of RAIDZ2 vdevs. This arrangement, analogous to a RAID 6+0 layout for non-ZFS storage, can yield good performance for ZFS when compared to a pool containing a single RAIDZ2 vdev. Again, experimentation is essential in order to drive the best performance from the hardware. | ||

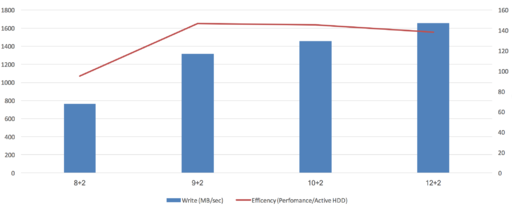

In theory, when determining the best configuration for performance, the same layout used for LDISKFS RAID6 (namely 10 disks, 8+2) should also apply to a ZFS RAIDZ2 vdev, but this is not always the case. While LDISKFS OSDs with RAID-6 usually require 8+2 (or some other power of 2 layout) for best performance, ZFS + RAID-Z2 is much more flexible and the RAID geometry should be chosen to best match the JBOD enclosure (also taking spare devices into account). For example, a benchmarking study conducted by Intel® Corporation ([[#Figure | In theory, when determining the best configuration for performance, the same layout used for LDISKFS RAID6 (namely 10 disks, 8+2) should also apply to a ZFS RAIDZ2 vdev, but this is not always the case. While LDISKFS OSDs with RAID-6 usually require 8+2 (or some other power of 2 layout) for best performance, ZFS + RAID-Z2 is much more flexible and the RAID geometry should be chosen to best match the JBOD enclosure (also taking spare devices into account). For example, a benchmarking study conducted by Intel® Corporation ([[#Figure 2-3-4|Figure 2-3-4]]) found that using an 11- or 12-disk RAIDZ2 vdev configuration can yield better overall throughput results than a 10-disk allocation, particularly when working with large I/O block sizes. This data is based on ZFS version 0.6.5, and it is to be expected that as ZFS development continues, performance characteristics will continue to improve for different configurations. | ||

Experimenting with different RAIDZ layouts, such as using 11 or 12 disks for RAIDZ2 as well as the more conventional or traditional 10 disk configuration, is therefore recommended in order to identify the optimum structure for achieving strong performance. | Experimenting with different RAIDZ layouts, such as using 11 or 12 disks for RAIDZ2 as well as the more conventional or traditional 10 disk configuration, is therefore recommended in order to identify the optimum structure for achieving strong performance. | ||

Figure 2-3-4. Performance and HDD efficiency for different ZFS pool layouts

Revision as of 21:35, 7 September 2017

The Object Storage Servers (OSS) in a Lustre file system provide the bulk data storage for all file content. Each OSS provides access to a set of storage volumes referred to as Object Storage Targets (OSTs) and each object storage target contains a number of binary objects representing the data for files in Lustre.

Files in Lustre are composed of one or more OST objects, in addition to the metadata inode. The allocation of objects to a file is referred to as the file’s layout, and is determined when the file is created. The layout defines the set of OST objects that will hold the file’s data. Each object for a given file is held on a separate OST, and data is written to the objects round-robin, in fixed-size chunks that together form a stripe across the objects. As an analogy, one can think of the objects of a file as virtual equivalents of the disk drives in a RAID-0 storage array. The chunk size (referred to here as the stripe width) is configurable when the file is created, and defaults to 1MiB.

Figure 2-3-1 shows examples of files with different storage layouts. File A is striped across 3 objects, File B comprises 2 objects, and File C is a single object. The number of objects and the size of the stripe are configurable when the file is created.
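The round-robin placement described above can be sketched in a few lines of Python. This is an illustration of the RAID-0 analogy only, not Lustre code: the function name `locate` and its parameters are hypothetical, and the sketch assumes a simple layout with one fixed chunk size and a fixed number of objects.

```python
MIB = 1024 * 1024  # the default chunk size described in the text


def locate(offset, stripe_count, stripe_size=MIB):
    """Map a byte offset in a file to (object index, byte offset in that object).

    Hypothetical helper: models plain round-robin striping only.
    """
    if offset < 0 or stripe_count < 1 or stripe_size < 1:
        raise ValueError("offset must be >= 0; stripe_count and stripe_size must be >= 1")
    chunk = offset // stripe_size        # which fixed-size chunk of the file
    obj_index = chunk % stripe_count     # round-robin choice of object
    stripe_row = chunk // stripe_count   # complete stripes that precede this chunk
    obj_offset = stripe_row * stripe_size + offset % stripe_size
    return obj_index, obj_offset


if __name__ == "__main__":
    # A file striped across 3 objects, like File A in Figure 2-3-1.
    for mib in range(6):
        print(mib, "MiB ->", locate(mib * MIB, stripe_count=3))
```

With a stripe count of 1, every offset maps to object 0 at the same offset, which corresponds to the single-object File C.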

The object layout specification for a file can be supplied directly by the user or application. Otherwise, it is assigned by the MDT, based on either the file system default layout or a layout policy defined for the directory in which the file is created. In both cases, the MDT is responsible for assigning objects to the file.

All objects for a file are allocated when the file itself is created; they are not allocated dynamically as data is written. (For efficiency, the MDT pre-creates a pool of zero-length objects on the OSTs, ready for assignment to files as they are created.) Objects are initially empty. This means that if a file is created with a stripe count of 4, all 4 objects will be allocated to the file, even if the file is initially empty or holds less than one stripe’s worth of data.

Each object storage server can host several object storage targets, typically in the range of 2 to 8 OSTs per active server, but sometimes more. There can be many object storage servers in a file system, scaling up to hundreds of servers. Each OST can be several tens of terabytes in size, and a typical OSS might serve anywhere from 100TB to 500TB of usable capacity, depending on the storage configuration.

The OSS population therefore determines the bandwidth and overall capacity of a Lustre file system. A single file system instance can theoretically scale to 1 Exabyte of available capacity using ZFS (EXT4/LDISKFS can scale to 512PB) across hundreds of servers, and there are supercomputer installations with 50PB or more of online capacity in a single file system instance. In terms of throughput performance, there are sites that have measured sustained bandwidth in excess of 1TB/sec.

Each OST operates independently of all other OSTs in the file system and there are no dependencies between objects themselves (a file may comprise multiple objects but the objects themselves don’t have a direct relationship). This, along with a flat namespace structure for objects, allows the performance of the file system to scale linearly as more OSTs are added.
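As a back-of-the-envelope illustration of this linear scaling, the sketch below multiplies per-OST figures by the number of OSTs. The function name `aggregate` and all per-OST values are hypothetical planning inputs, not measurements. The model is deliberately idealized and ignores network, client, and metadata limits.

```python
def aggregate(oss_count, osts_per_oss, ost_capacity_tb, ost_bandwidth_gbs):
    """Estimate totals for a file system under an idealized linear model.

    All inputs are assumptions supplied by the planner.
    """
    if min(oss_count, osts_per_oss) < 1:
        raise ValueError("need at least one OSS and one OST per OSS")
    ost_total = oss_count * osts_per_oss
    return {
        "osts": ost_total,
        "capacity_tb": ost_total * ost_capacity_tb,
        "bandwidth_gbs": ost_total * ost_bandwidth_gbs,
    }


if __name__ == "__main__":
    # Doubling the number of servers doubles both estimates in this model.
    print(aggregate(oss_count=2, osts_per_oss=4, ost_capacity_tb=50, ost_bandwidth_gbs=1.5))
    print(aggregate(oss_count=4, osts_per_oss=4, ost_capacity_tb=50, ost_bandwidth_gbs=1.5))
```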

While there is no strict technical limitation that prevents OSTs from different file systems from being accessed from a common object storage server, it is not a common or recommended practice. As a rule, each OSS should be associated with a single Lustre file system. This simplifies system planning and maintenance, and makes the environment more predictable with regard to performance. A similar rule applies when working with high-availability configurations.

Object Storage Server Building Blocks

As with other Lustre server components, object storage servers can be combined into high availability configurations, and this is the normal practice for Lustre file system implementations. Unlike the metadata and management services, object storage servers are only grouped with other object storage servers when designing for HA.

The physical design of a typical OSS building block is very similar to that of the MDS. The most significant external difference is the storage configuration, which is typically designed to maximize capacity and read/write bandwidth. Storage volumes are commonly formatted for RAID 6, or in the case of ZFS, RAID-Z2. This provides a balance between optimal capacity and data integrity. The example in Figure 2-3-3a is a low-density configuration with only 48 disks across two enclosures. It is more common to see multiple high-density storage enclosures with 60 disks, 72 disks, or more attached to object storage servers, an example of which is shown in Figure 2-3-3b.

A typical building block configuration comprises two OSS hosts connected to a common pool of shared storage. This storage may be a single enclosure or several, depending on the requirements of the implementation. A site may wish to optimize the Lustre installation for throughput, choosing lower-capacity, high-performance storage devices attached to a relatively large number of servers, or it may emphasize capacity over throughput, with high-density storage connected to relatively few servers. Lustre is very versatile and affords system architects flexibility in designing a solution appropriate to the requirements of the site.

Object Storage Server Design Considerations

Object storage servers are throughput-oriented systems, and benefit from large memory configurations and a large number of cores for servicing Lustre kernel threads. There is less emphasis on the raw clock speed per core because the workloads are not typically oriented around IOPS. Throughput bandwidth is the dominant factor in designing object storage server hardware. This follows through to the storage hardware as well, which will typically comprise high-capacity, high-density enclosures. Flash storage is currently less prevalent for object storage due to the higher cost-per-terabyte relative to disk, although it is reasonably clear that at some point this will change in favour of flash.

Storage volumes are typically configured as RAID 6 volumes for LDISKFS OSDs, and RAIDZ2 for ZFS OSDs. The volumes are usually configured in anticipation of a 1MiB I/O transaction because this is the default unit used by Lustre for all I/O. For a RAID 6 volume, this means creating a disk arrangement that can accommodate a 1MiB full stripe write without any read-modify-write overhead. Typically this means arranging the RAID 6 volumes in layouts where the number of “data disks” is a power of 2, plus the 2 parity disks. (Parity is distributed in RAID 6, so this is not an accurate representation of the data layout on the storage, but the idea is to describe the amount of usable capacity in terms of the equivalent number of disks in the RAID 6 volume.)

The most common RAID 6 layout for LDISKFS is 10 disks (8+2).
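The arithmetic behind the power-of-2 guideline can be sketched as follows. For a 1MiB write to fill exactly one full stripe, 1MiB must divide evenly across the data disks. The function name `chunk_size_for_full_stripe` is hypothetical, and the sketch assumes each data disk receives one equally sized chunk per stripe.

```python
KIB = 1024
MIB = 1024 * KIB


def chunk_size_for_full_stripe(data_disks, io_size=MIB):
    """Return the per-disk chunk size in bytes that makes one io_size write
    exactly one full stripe, or None if io_size does not divide evenly.

    Hypothetical helper for illustration only.
    """
    if data_disks < 1:
        raise ValueError("data_disks must be >= 1")
    chunk, remainder = divmod(io_size, data_disks)
    return None if remainder else chunk


if __name__ == "__main__":
    for data_disks in (4, 8, 9, 10, 16):
        size = chunk_size_for_full_stripe(data_disks)
        label = f"{size // KIB} KiB per disk" if size else "no even split of 1 MiB"
        print(f"{data_disks}+2: {label}")
```

Because 1MiB is itself a power of 2, only power-of-2 data disk counts divide it evenly. This is why 8+2 fits the default I/O size, while a 9+2 RAID 6 volume would not.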

Whereas a RAID 6 volume or LUN for LDISKFS will be used as an individual object storage target, ZFS OSTs will be created from a single dataset per zpool. The composition of the zpool in terms of the vdevs does require experimentation to find the optimal arrangement balancing capacity utilization and performance.

Each OST zpool must contain a minimum of one RAIDZ2 vdev, but may contain many, creating a stripe of RAIDZ2 vdevs. This arrangement, analogous to a RAID 6+0 layout for non-ZFS storage, can yield good performance for ZFS when compared to a pool containing a single RAIDZ2 vdev. Again, experimentation is essential in order to drive the best performance from the hardware.
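To make the "stripe of RAIDZ2 vdevs" concrete, the sketch below builds the argument list for a `zpool create` command that groups devices into several `raidz2` vdevs. The pool name, device names, and the helper `zpool_create_args` are hypothetical. The sketch only constructs the command and does not run it, and it omits pool properties and the subsequent Lustre formatting step that a real deployment would need.

```python
def zpool_create_args(pool, devices, vdev_width):
    """Build a `zpool create` argument list with one raidz2 vdev per group
    of vdev_width devices. Hypothetical helper; nothing is executed.
    """
    if vdev_width <= 2:
        raise ValueError("a RAIDZ2 vdev needs more devices than its two parity devices")
    if not devices or len(devices) % vdev_width:
        raise ValueError("device count must be a non-zero multiple of vdev_width")
    args = ["zpool", "create", pool]
    for start in range(0, len(devices), vdev_width):
        # Each "raidz2" keyword starts a new vdev; ZFS stripes across the vdevs.
        args.append("raidz2")
        args.extend(devices[start:start + vdev_width])
    return args


if __name__ == "__main__":
    # Hypothetical device names: 20 disks arranged as two 10-disk (8+2) vdevs.
    disks = [f"disk{i:02d}" for i in range(20)]
    print(" ".join(zpool_create_args("ost0pool", disks, vdev_width=10)))
```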

In theory, when determining the best configuration for performance, the same layout used for LDISKFS RAID 6 (namely 10 disks, 8+2) should also apply to a ZFS RAIDZ2 vdev, but this is not always the case. While LDISKFS OSDs with RAID 6 usually require 8+2 (or some other power-of-2 layout) for best performance, ZFS with RAIDZ2 is much more flexible, and the RAID geometry should be chosen to best match the JBOD enclosure (also taking spare devices into account). For example, a benchmarking study conducted by Intel® Corporation (Figure 2-3-4) found that an 11- or 12-disk RAIDZ2 vdev configuration can yield better overall throughput than a 10-disk allocation, particularly when working with large I/O block sizes. This data is based on ZFS version 0.6.5, and it is to be expected that performance characteristics for different configurations will change as ZFS development continues.

Experimenting with different RAIDZ layouts, such as 11 or 12 disks for RAIDZ2 as well as the more conventional 10-disk configuration, is therefore recommended in order to identify the layout that delivers the best performance.
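Before benchmarking, it can help to tabulate how each candidate vdev width fits the enclosure. The sketch below does only that capacity accounting for a given number of disk slots. The function name `raidz2_layouts` is hypothetical. It says nothing about throughput, which still has to be measured.

```python
def raidz2_layouts(enclosure_disks, widths):
    """For each RAIDZ2 vdev width, report how many whole vdevs fit in the
    enclosure, how many data (non-parity) disks that gives, and how many
    disks are left over (for example, as spares). Hypothetical helper.
    """
    rows = []
    for width in widths:
        if width <= 2:
            raise ValueError("RAIDZ2 width must exceed its two parity disks")
        vdevs = enclosure_disks // width
        rows.append({
            "width": width,
            "vdevs": vdevs,
            "data_disks": vdevs * (width - 2),
            "leftover": enclosure_disks - vdevs * width,
        })
    return rows


if __name__ == "__main__":
    # A 60-disk JBOD with 10-, 11-, 12- and 14-disk vdevs (8+2, 9+2, 10+2, 12+2).
    for row in raidz2_layouts(60, (10, 11, 12, 14)):
        print(row)
```

In this accounting, 10- and 12-disk vdevs use all 60 slots, while 11- and 14-disk vdevs leave slots free that could hold spare devices.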

Note (Figure 2-3-4): obdfilter-survey write benchmark on a single OST using 8 objects and 64 threads, comparing 4 different ZFS pool layouts: 8+2, 9+2, 10+2 and 12+2. The primary Y axis measures global performance; the secondary Y axis measures the efficiency of a single disk, using only the active disks (8/9/10/12) for the calculation. The test was performed on a 60-HDD JBOD.