Understanding Lustre Internals

| Line 68: | Line 68: | ||

future. | future. | ||

[[File:Lustre_components.png| | [[File:Lustre_components.png|500px|thumb|Figure 1. Lustre file system components in a basic cluster]] | ||

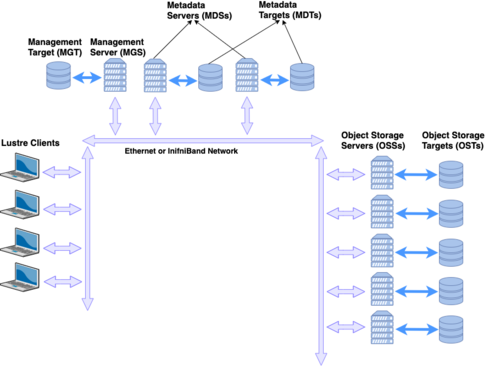

Figure 1 shows a simplified version of the Lustre file system components in a basic cluster. In this figure, the MGS server is distinct from the MDS servers, but for small file systems, the MGS and MDS may be combined into a single server and the MGT may coexist on the same block device as the primary MDT. | |||

=== Lustre File Layouts === | |||

Lustre stores file data by splitting the file contents into chunks and | |||

then storing those chunks across the storage targets. By spreading the | |||

file across multiple targets, the file size can exceed the capacity of | |||

any one storage target. It also allows clients to access parts of the | |||

file from multiple Lustre servers simultaneously, effectively scaling up | |||

the bandwidth of the file system. Users have the ability to control many | |||

aspects of the file’s layout by means of the "lfs setstripe" command, | |||

and they can query the layout for an existing file using the | |||

"lfs getstripe" command. | |||

File layouts fall into one of two categories: | |||

# Normal / RAID0 - File data is striped across multiple OSTs in a round-robin manner. | |||

# Composite - Complex layouts that involve several components with potentially different striping patterns. | |||

==== Normal (RAID0) Layouts ==== | |||

A normal layout is characterized by a stripe count and a stripe size. | |||

The stripe count determines how many OSTs will be used to store the file | |||

data, while the stripe size determines how much data will be written to | |||

an OST before moving to the next OST in the layout. As an example, | |||

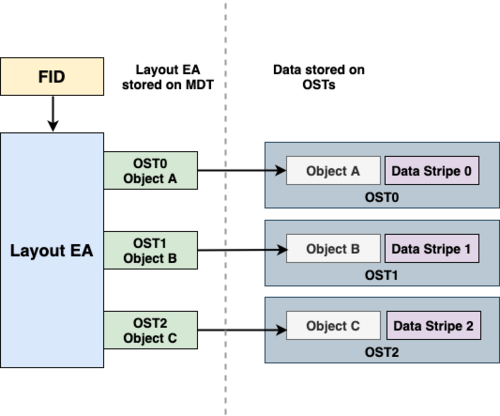

consider the file layouts shown in Figure 2 for a simple file system with 3 OSTs | |||

residing on 3 different OSS nodes edit. Note that Lustre indexes the | |||

OSTs starting at zero. | |||

[[File:layoutEA.png|500px|thumb|Figure 2. Normal RAID0 file striping in Lustre]] | |||

File A has a stripe count of three, so it will utilize all OSTs in the | |||

file system. We will assume that it uses the default Lustre stripe size | |||

of 1MB. When File A is written, the first 1MB chunk gets written to | |||

OST0. Lustre then writes the second 1MB chunk of the file to OST1 and | |||

the third chunk to OST2. When the file exceeds 3 MB in size, Lustre will | |||

round-robin back to the first allocated OST and write the fourth 1MB | |||

chunk to OST0, followed by OST1, etc. This illustrates how Lustre writes | |||

data in a RAID0 manner for a file. It should be noted that although File | |||

A has three chunks of data on OST0 (chunks #1, #4, and #7), all these | |||

chunks reside in a single object on the backend file system. From | |||

Lustre’s point of view, File A consists of three objects, one per OST. | |||

Files B and C show layouts with the default Lustre stripe count of one, | |||

but only File B uses the default stripe size of 1MB. The layout for File | |||

C has been modified to use a larger stripe size of 2MB. If both File B | |||

and File C are 2MB in size, File B will be treated as two consecutive | |||

chunks written to the same OST whereas File C will be treated as a | |||

single chunk. However, this difference is mostly irrelevant since both | |||

files will still consist of a single 2MB object on their respective | |||

OSTs. | |||

Revision as of 13:05, 16 November 2021

Lustre Architecture

What is Lustre?

Lustre is an open-source, distributed parallel file system licensed under the GNU General Public License (GPL) and developed and maintained by DataDirect Networks (DDN). Owing to its extremely scalable architecture, Lustre deployments are popular in scientific supercomputing as well as in the oil and gas, manufacturing, rich media, and finance sectors. Lustre presents a POSIX interface to its clients, with parallel access to shared file objects. As of this writing, Lustre is the most widely used file system among the 500 fastest computers in the world: it is the file system of choice on 7 of the top 10, over 70% of the top 100, and over 60% of the top 500.[1]

Lustre Features

Lustre is designed for scalability and performance. The aggregate storage capacity and file system bandwidth can be scaled up by adding more servers to the file system, and performance for parallel applications can often be increased by utilizing more Lustre clients. Some practical limits are shown in the accompanying table, along with values from known production file systems.

Lustre has several features that enhance performance, usability, and stability. Some of these features include:

- POSIX Compliance: With few exceptions, Lustre passes the full POSIX test suite. Most operations are atomic to ensure that clients do not see stale data or metadata. Lustre also supports mmap() file IO.

- Online file system checking: Lustre provides a file system checker (LFSCK) to detect and correct file system inconsistencies. LFSCK can be run while the file system is online and in production, minimizing potential downtime.

- Controlled file layouts: The file layouts that determine how data is placed across the Lustre servers can be customized on a per-file basis. This allows users to optimize the layout to best fit their specific use case.

- Support for multiple backend file systems: When formatting a Lustre file system, the underlying storage can be formatted as either ldiskfs (a performance-enhanced version of ext4) or ZFS.

- Support for high-performance and heterogeneous networks: Lustre can utilize RDMA over low-latency networks such as InfiniBand or Intel Omni-Path, in addition to supporting TCP over commodity networks. The Lustre networking layer can route traffic between multiple networks, making it feasible to run a single site-wide Lustre file system.

- High availability: Lustre supports active/active failover of storage resources and multiple mount protection (MMP) to guard against errors that may result from mounting the storage simultaneously on multiple servers. High-availability software such as Pacemaker/Corosync can be used to provide automatic failover capabilities.

- Security features: Lustre follows the normal UNIX file system security model enhanced with POSIX ACLs. The root squash feature limits the ability of Lustre clients to perform privileged operations. Lustre also supports the configuration of Shared-Secret Key (SSK) security.

- Capacity growth: File system capacity can be increased by adding storage for data and metadata while the file system is online.

Lustre Components

Lustre is an object-based file system that consists of several components:

- Management Server (MGS) - Provides configuration information for the file system. When mounting the file system, the Lustre clients will contact the MGS to retrieve details on how the file system is configured (what servers are part of the file system, failover information, etc.). The MGS can also proactively notify clients about changes in the file system configuration and plays a role in the Lustre recovery process.

- Management Target (MGT) - Block device used by the MGS to persistently store Lustre file system configuration information. It typically requires only a relatively small amount of space (on the order of 100 MB).

- Metadata Server (MDS) - Manages the file system namespace and provides metadata services to clients such as filename lookup, directory information, file layouts, and access permissions. The file system will contain at least one MDS but may contain more.

- Metadata Target (MDT) - Block device used by an MDS to store metadata information. A Lustre file system will contain at least one MDT, which holds the root of the file system, but it may contain multiple MDTs. Common configurations use one MDT per MDS, but it is possible for an MDS to host multiple MDTs. MDTs can be shared among multiple MDSs to support failover, but each MDT can only be mounted by one MDS at any given time.

- Object Storage Server (OSS) - Stores file data objects and makes the file contents available to Lustre clients. A file system will typically have many OSS nodes to provide a higher aggregate capacity and network bandwidth.

- Object Storage Target (OST) - Block device used by an OSS node to store the contents of user files. An OSS node will often host several OSTs. These OSTs may be shared among multiple hosts, but just like MDTs, each OST can only be mounted on a single OSS at any given time. The total capacity of the file system is the sum of all the individual OST capacities.

- Lustre Client - Mounts the Lustre file system and makes the contents of the namespace visible to the users. There may be hundreds or even thousands of clients accessing a single Lustre file system. Each client can also mount more than one Lustre file system at a time.

- Lustre Networking (LNet) - Network protocol used for communication between Lustre clients and servers. Supports RDMA on low-latency networks and routing between heterogeneous networks.
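The relationship between servers and targets described in the list above can be illustrated with a small model. This is only a sketch: the `Target` class, its field names, the host names, and the capacities are hypothetical and are not part of any Lustre API. It shows two rules from the text: a target may be reachable from several failover hosts but is mounted on only one at a time, and the file system capacity is the sum of the OST capacities.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Target:
    """Hypothetical model of an MDT or OST block device."""
    name: str
    capacity_bytes: int
    failover_hosts: List[str]          # servers that are able to mount this target
    mounted_on: Optional[str] = None   # the single server currently mounting it

    def mount(self, host: str) -> None:
        if host not in self.failover_hosts:
            raise ValueError(f"{host} is not configured to serve {self.name}")
        # A target can only be mounted on one server at any given time.
        if self.mounted_on is not None and self.mounted_on != host:
            raise RuntimeError(f"{self.name} is already mounted on {self.mounted_on}")
        self.mounted_on = host

    def unmount(self) -> None:
        self.mounted_on = None


def total_capacity(osts: List[Target]) -> int:
    """Total file system capacity is the sum of the individual OST capacities."""
    return sum(ost.capacity_bytes for ost in osts)


TIB = 2 ** 40

# Made-up example: three OSTs shared between two OSS nodes for failover.
osts = [
    Target("OST0000", 10 * TIB, ["oss1", "oss2"]),
    Target("OST0001", 10 * TIB, ["oss1", "oss2"]),
    Target("OST0002", 20 * TIB, ["oss2", "oss1"]),
]

if __name__ == "__main__":
    print(total_capacity(osts) // TIB, "TiB total")
```

In a real deployment the single-mount rule is enforced by mechanisms such as MMP and the high-availability software mentioned earlier, not by application code; the exception here only stands in for that protection.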

The collection of MGS, MDS, and OSS nodes is sometimes referred to as the “frontend”. The individual OSTs and MDTs must be formatted with a local file system in order for Lustre to store data and metadata on those block devices. Currently, only ldiskfs (a modified version of ext4) and ZFS are supported for this purpose. The choice of ldiskfs or ZFS is often referred to as the “backend file system”. Lustre provides an abstraction layer for these backend file systems to allow for the possibility of including other types of backend file systems in the future.

![Figure 1. Lustre file system components in a basic cluster](image-1.png)

Figure 1 shows a simplified version of the Lustre file system components in a basic cluster. In this figure, the MGS is distinct from the MDSs, but for small file systems, the MGS and MDS may be combined into a single server and the MGT may coexist on the same block device as the primary MDT.

Lustre File Layouts

Lustre stores file data by splitting the file contents into chunks and then storing those chunks across the storage targets. By spreading the file across multiple targets, the file size can exceed the capacity of any one storage target. It also allows clients to access parts of the file from multiple Lustre servers simultaneously, effectively scaling up the bandwidth of the file system. Users have the ability to control many aspects of the file’s layout by means of the "lfs setstripe" command, and they can query the layout for an existing file using the "lfs getstripe" command.

File layouts fall into one of two categories:

- Normal / RAID0 - File data is striped across multiple OSTs in a round-robin manner.

- Composite - Complex layouts that involve several components with potentially different striping patterns.

Normal (RAID0) Layouts

A normal layout is characterized by a stripe count and a stripe size. The stripe count determines how many OSTs will be used to store the file data, while the stripe size determines how much data will be written to an OST before moving to the next OST in the layout. As an example, consider the file layouts shown in Figure 2 for a simple file system with 3 OSTs residing on 3 different OSS nodes. Note that Lustre indexes the OSTs starting at zero.

![Figure 2. Normal RAID0 file striping in Lustre](image-2.png)
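The round-robin placement implied by the stripe count and stripe size can be written down as a little arithmetic. The following is a minimal sketch, not Lustre code: the function name `locate` is hypothetical, and it assumes that "1MB" means 2^20 bytes. It returns the position within the layout (which equals the OST index only when the layout starts at OST0, as in the example) and the byte offset inside that OST's object.

```python
MIB = 1 << 20  # assumption: the stripe sizes in the text are binary megabytes


def locate(offset: int, stripe_size: int, stripe_count: int):
    """Map a file byte offset to (stripe index in the layout, offset in the object)."""
    chunk = offset // stripe_size         # zero-based chunk number within the file
    stripe_index = chunk % stripe_count   # round-robin position in the layout
    row = chunk // stripe_count           # completed passes over all stripes
    object_offset = row * stripe_size + offset % stripe_size
    return stripe_index, object_offset


if __name__ == "__main__":
    # Stripe count 3, stripe size 1 MiB: print where each of the first
    # five chunks of the file begins.
    for n in range(5):
        print(n, locate(n * MIB, MIB, 3))
```

With a stripe count of 3, byte offset 3 MiB (the start of the fourth chunk) maps back to stripe index 0 at object offset 1 MiB, which is the wrap-around behaviour the layout definition describes.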

File A has a stripe count of three, so it will utilize all OSTs in the file system. We will assume that it uses the default Lustre stripe size of 1 MB. When File A is written, the first 1 MB chunk gets written to OST0. Lustre then writes the second 1 MB chunk of the file to OST1 and the third chunk to OST2. When the file exceeds 3 MB in size, Lustre will round-robin back to the first allocated OST and write the fourth 1 MB chunk to OST0, followed by OST1, etc. This illustrates how Lustre writes data in a RAID0 manner for a file. Note that although File A has three chunks of data on OST0 (chunks #1, #4, and #7), all these chunks reside in a single object on the backend file system. From Lustre’s point of view, File A consists of three objects, one per OST.

Files B and C show layouts with the default Lustre stripe count of one, but only File B uses the default stripe size of 1 MB. The layout for File C has been modified to use a larger stripe size of 2 MB. If both File B and File C are 2 MB in size, File B will be treated as two consecutive chunks written to the same OST, whereas File C will be treated as a single chunk. However, this difference is mostly irrelevant, since both files will still consist of a single 2 MB object on their respective OSTs.
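The File A, B, and C examples can be checked with a short calculation. This is a sketch under stated assumptions: the helper names `object_sizes` and `chunk_count` are hypothetical, File A is assumed to be exactly 7 MiB (the text only implies that it has at least seven chunks), "1 MB" is taken as 2^20 bytes, and the files are assumed to be written densely from offset zero.

```python
MIB = 1 << 20  # assumption: sizes in the text are binary megabytes


def object_sizes(file_size: int, stripe_size: int, stripe_count: int):
    """Return the size of each per-OST object for a densely written RAID0 file."""
    sizes = [0] * stripe_count
    full_chunks, tail = divmod(file_size, stripe_size)
    for chunk in range(full_chunks):
        sizes[chunk % stripe_count] += stripe_size   # round-robin across objects
    if tail:
        sizes[full_chunks % stripe_count] += tail    # final partial chunk, if any
    return sizes


def chunk_count(file_size: int, stripe_size: int) -> int:
    """Number of stripe-sized chunks the file is divided into (ceiling division)."""
    return -(-file_size // stripe_size)


if __name__ == "__main__":
    # File A: stripe count 3, stripe size 1 MiB, assumed size 7 MiB.
    print("File A objects (MiB):", [s // MIB for s in object_sizes(7 * MIB, MIB, 3)])
    # File B: stripe count 1, stripe size 1 MiB, size 2 MiB.
    print("File B:", chunk_count(2 * MIB, MIB), "chunks,", object_sizes(2 * MIB, MIB, 1))
    # File C: stripe count 1, stripe size 2 MiB, size 2 MiB.
    print("File C:", chunk_count(2 * MIB, 2 * MIB), "chunks,", object_sizes(2 * MIB, 2 * MIB, 1))
```

Under these assumptions File A yields three objects, with the OST0 object holding three chunks' worth of data, while Files B and C differ in chunk count but each produce a single 2 MiB object.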